If your keyboard was actually working, you pressed a key. If it was not working, you went to get a new keyboard. What is “not thought through” about that?

0·1 month ago

we are talking about cheap home dsl routers here. i think the extent of the damage is that someone has to revert to offline porn.

1·1 month ago

which would be bad for the world if people in the competing nations didn’t have internet access

these people would find out that there is such a thing as fm radio, and that a lot of their phones are capable of receiving it.

11·1 month ago

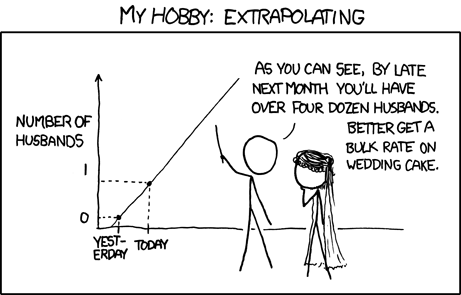

oh sure. when someone says “you can’t just blindly extrapolate a curve”, there must be some conspiracy behind it, it absolutely cannot be because you can’t just blindly extrapolate a curve 😂

1·1 month ago

AI made those discoveries. Yes, it is true that humans made AI, so in a way, humans made the discoveries, but if that is your take, then it is impossible for AI to ever make any discovery.

if this is your take, then a lot of keyboards made a lot of discoveries.

AI could make a discovery if there was one (an ai). there is none at the moment, and there won’t be any in the foreseeable future.

a tool that can generate statistically probable text without really understanding the meaning of the words is not an intelligence in any sense of the word.

your other examples, like playing chess, are just applying computers to brute-force through a specific mundane task, which is obviously something computers are good at and have been used for since we have had them, but again, that does not constitute thinking, or intelligence, in any way.

it is laughable to think that anyone knows where the current rapid trajectory will stop for this new technology, and much more laughable to think we are already at the end.

it is also laughable to assume it will just continue indefinitely, because “there is a trajectory”. a lot of technologies have some kind of limit.

and just to clarify, i am not some anti-computer, get-back-to-the-trees type. i am eager to see what machine learning models will bring in the field of evidence-based medicine, for example, which is something where humans notoriously suck. but i will still not call it “intelligence” or “thinking”, or “making a discovery”. i will call it synthesizing an amount of data that would be humanly impossible to process and finding a pattern in it, and i will consider it a cool result, no matter what we call it.

1·1 month ago

In general, “The technology is young and will get better with time” is not just a reasonable argument, but almost a consistent pattern. Note that XKCD’s example is about events, not technology.

yeah, no.

try to compare horse speed with the ford model t and blindly extrapolate that into the future. look at moore’s law. technology does not just grow upwards if you give it enough time, most of it has some kind of limit.

and it is not out of the realm of possibility that llms, having already stolen all of human knowledge from the internet, having found it is not enough and spewing out bullshit as a result of that monumental theft, have already reached it.

that may not be the case for every machine learning tool developed for some specific purpose, but the blind assumption that it will just keep growing indefinitely, because “there is a trend”, is overly optimistic.

11·1 month ago

will therefore never be good enough?

no one said that. but someone did try to reject the fact that it is demonstrably bad right now, because “there is a trajectory”.

1·1 month ago

I appreciate the XKCD comic, but I think you’re exaggerating that other commenter’s intent.

i don’t think so. the other commenter clearly rejects the criticism (1) and implies that the existence of an upward trajectory means it will one day overcome the problem (2).

while (1) is a well-documented fact right now, (2) is just wishful thinking.

hence the comic, because “the trajectory” doesn’t really mean anything.

31·1 month ago

For example, AI has discovered

no, people have discovered. llms were just a tool used to manipulate large sets of data (instructed and trained by people for the specific task) which is something in which computers are obviously better than people. but same as we don’t say “keyboard made a discovery”, the llm didn’t make a discovery either.

that is just intentionally misleading, as is calling the technology “artificial intelligence”, because there is absolutely no intelligence whatsoever.

and comparing that to einstein is just laughable. einstein understood the broad context and principles and applied them creatively. an llm doesn’t understand anything. it is more like a toddler watching its father shave and then moving a lego piece across its face pretending to shave as well, without really understanding what shaving is.

1·1 month ago

There is a good chance that it is instrumental in discoveries that lead to efficient clean energy

There is exactly zero chance… LLMs don’t discover anything, they just remix already existing information. That is how it works.

9·1 month ago

Seeing the trajectory is not the ultimate answer to anything.

4·2 months ago

The Atlantic | Neal Stephenson’s Most Stunning Prediction

The sci-fi legend coined the term metaverse. But he was most prescient about our AI age. By Matteo Wong

1·2 months ago

Have you heard of “pedestrian controlled” trucks

they are called forklifts and they have been around for quite some time now 😆

30·2 months ago

because blocked is considered good and federated bad… i assume.

1·2 months ago

might be. might also be a miui thing, i don’t know.

the fact remains that (android does x) does not equal (some subset of android does x)

edit: seems the function was added in android 12 - https://arstechnica.com/gadgets/2023/06/uk-police-blame-android-for-record-number-of-false-emergency-calls/

which means that as of right now it is available to 60% of android users - https://gs.statcounter.com/android-version-market-share/mobile/worldwide/

21·2 months ago

Which Android version are you on?

10 qkq1.190910.002

44·2 months ago

Right, correcting your incorrect information is a “weird flex”. What are you, five?

On my Mi Max 3 it does not work either. In the “configure buttons” section of the menu there is no “call emergency number” action, nor is there a “press [any button] five times” trigger available. So clearly the function your phone has is not universal. What a wild world we live in!

141·7 months ago

well, doom was true 3d, but more importantly, doom had network play, so people have a lot of fond memories from those early lan parties.

but it was really wolfenstein, with its pseudo-3d that squeezed the maximum possible out of the cpu power of the computers back then and… started it all, including the fame of id soft. id soft was responsible for both - after the success of wolfenstein, they created doom (and ultimately quake).

what?