As of Jan 2034, 512KiB is determined to be the perfect blksize to minimize system call overhead across most systems.

as of Jan 2034 there are no humans so thus we can discontinue tools for humans

Feb 2034: grammar correction: there are no humans.

The I/O size is a reason why it’s better to use cp than dd to copy an ISO to a USB stick. cp automatically selects an I/O size which should yield good performance, while dd’s default is extremely small and it’s necessary to specify a sane value manually (e.g. bs=1M).
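To make the block-size point concrete, here is a rough sketch that copies the same 4 MiB scratch file twice with dd, once with the default block size and once with bs=1M, and prints how many records each run needed. It assumes GNU dd and GNU stat; the file name fake.iso is made up for illustration, and nothing here touches a real device.

```shell
# Sketch only: works on a scratch file, not a real USB stick.
workdir=$(mktemp -d)
src="$workdir/fake.iso"   # hypothetical stand-in for an ISO image

# Create 4 MiB of test data.
dd if=/dev/zero of="$src" bs=1M count=4 2>/dev/null

# dd's default block size is 512 bytes, so the copy takes many small reads and writes.
small=$(dd if="$src" of="$workdir/out_small" 2>&1 | head -n 1)

# With bs=1M the same data moves in a handful of large reads and writes.
large=$(dd if="$src" of="$workdir/out_large" bs=1M 2>&1 | head -n 1)

echo "default bs: $small"
echo "bs=1M:      $large"

# I/O size hint reported for this file (GNU stat; other stat versions differ).
stat -c 'I/O size hint: %o bytes' "$src" 2>/dev/null || echo "stat -c not available here"

rm -rf "$workdir"
```

The record counts are only a proxy for system call overhead; actual timings depend on the device and the kernel's caching.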

With “everything” being a file on Linux, dd isn’t really special for simply cloning a disk. The habit of using dd is still quite strong for me.

Interesting. Is this serious advice and if so, what’s the new canonical command to burn an ISO?

Recently, I learned that booting from a dd’d image is actually a major hack. I can’t reconstruct the details on my own, but it has something to do with no actual boot entry and partition table being created. Because of this, it’s better to use an actual media creation tool such as Rufus or balenaEtcher.

Found the Super User thread (someone had linked it on Lemmy): https://superuser.com/a/1527373

I was not aware, thanks for the link!

Wow. I’ve been using dd for years and I’d consider myself on the more experienced end of the Linux user base. I’ll use cp from now on. Great link.

Thanks for the tip. Not that I plan to read up on the matter and make the next cold installation even more anxiety-inducing than it already is. Sometimes Linux would really benefit if there were One Correct Way to do things, I find. Especially something so critical as this.

There is. Just use a media creation tool, like Rufus. dd’ing onto a drive is a hack.

Huh, thanks for the link. I knew that just dd’ing doesn’t work for Windows ISOs, but I didn’t know that it was the Linux distros doing the weird shenanigans this time around.

It is really informative! Spread the word.

As I understand it, there isn’t really a canonical way to burn an ISO. Any tool that copies a file bit for bit to another file should be able to copy a disk image to a disk. Even shell built-ins should do the job. E.g.

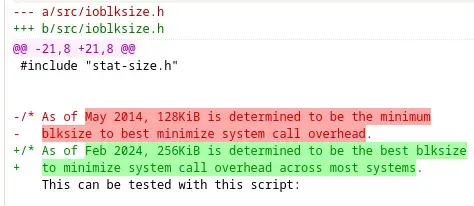

`cat my.iso > /dev/myusbstick` reads the file and puts it on the stick (which is also a file). cat reads a file byte for byte (test by cat’ing any binary) and redirects the output to another file, which can be a device file like a USB stick.

There’s no practical difference between those tools, besides how fast they are. E.g. dd with a block size below 1K is pretty slow [1], but I guess most tools (e.g. cp) select I/O sizes large enough that they perform nearly identically.

[1] https://superuser.com/questions/234199/good-block-size-for-disk-cloning-with-diskdump-dd#234204
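If you want to convince yourself that the tools really produce the same bytes, a quick sketch like the following compares the output of cat, cp, and dd. It writes to scratch files rather than a device, and the file names are made up for the example.

```shell
# Sketch only: copies a scratch "image" three ways and compares the results.
workdir=$(mktemp -d)
img="$workdir/my.iso"   # hypothetical stand-in for a real image

# 1 MiB of random data, so accidental matches are not a concern.
head -c 1048576 /dev/urandom > "$img"

cat "$img" > "$workdir/via_cat"
cp "$img" "$workdir/via_cp"
dd if="$img" of="$workdir/via_dd" bs=1M 2>/dev/null

# cmp -s exits non-zero as soon as two files differ in any byte.
identical=yes
for copy in via_cat via_cp via_dd; do
    cmp -s "$img" "$workdir/$copy" || identical=no
done
echo "all copies identical: $identical"

rm -rf "$workdir"
```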

For those that like the status bar dd provides, I highly recommend pv

pv diskimage.iso > /dev/usb

Does cp capture the raw bits like dd does?

Not saying that it’s useful in the case you’re describing, but that’s always been the reason I use it. When I want every bit on a disk copied to another disk, even the things with no metadata present.

As long as you copy from the device file (`/dev/whatever`), you will get “the raw bits”, regardless of whether you use `dd`, `cp`, or even `cat`.
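One way to check this after writing an image is to read the raw bytes back and compare them with the source. The sketch below uses a regular file (fake_stick, a made-up name) as a stand-in for /dev/whatever, and makes it larger than the image, as a real stick usually is, so only the first image-sized chunk is compared.

```shell
# Sketch only: a regular file plays the role of the device.
workdir=$(mktemp -d)
img="$workdir/my.iso"        # hypothetical image
dev="$workdir/fake_stick"    # hypothetical stand-in for /dev/whatever

head -c 1048576 /dev/urandom > "$img"                  # 1 MiB image
dd if=/dev/zero of="$dev" bs=1M count=2 2>/dev/null    # 2 MiB "stick"

# Write the image. conv=notrunc keeps the stand-in file at its full size;
# a real block device cannot be truncated anyway.
dd if="$img" of="$dev" bs=1M conv=notrunc 2>/dev/null

# Read back exactly as many bytes as the image has and compare them.
size=$(( $(wc -c < "$img") ))
if head -c "$size" "$dev" | cmp -s - "$img"; then
    verified=yes
else
    verified=no
fi
echo "raw bytes match the image: $verified"

rm -rf "$workdir"
```

On a real device you would also want to flush pending writes first (for example with sync) so the comparison is not served from cache alone.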

For me, I only reached 5 Gbps or so on my NVMe by using dd and fine-tuning bs=. In second place there was steam with ~3 Gbps. The same thing with my 1 Gbps USB stick. Then again, the time I saved through the extra speed was more than used up by the time it took me to fine-tune.

Sorry for the nitpick, but you probably mean GB/s (or GiB/s, but I won’t go there). Gbps is gigabits per second, not gigabytes per second.

Since both are used in different contexts yet they differ by about a factor of 8, not confusing the two is useful.
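For the arithmetic: one byte is 8 bits, so a figure in Gbps divided by 8 gives GB/s. A tiny helper (the function name is made up for this example) shows what the numbers quoted above would be if they really were gigabits:

```shell
# Convert gigabits per second to gigabytes per second (8 bits per byte).
gbps_to_gbytes() {
    awk -v gbps="$1" 'BEGIN { printf "%.3f\n", gbps / 8 }'
}

gbps_to_gbytes 5   # prints 0.625
gbps_to_gbytes 3   # prints 0.375
gbps_to_gbytes 1   # prints 0.125
```

So "5 Gbps" taken literally would be 0.625 GB/s, while 5 GB/s would be 40 Gbps.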

This is a good example of how most of the performance improvements during a rewrite into a new language come from the lessons learned and the new codebase rather than from the language’s strengths.

Btw do you know how uutils (rust rewrite of GNU coreutils) is doing?

Wrong license still, but I just read an article saying they are making progress on compatibility with GNU coreutils.

What does “wrong license” mean? Does it need to be GPL(v3)?

Ideally, yes. Moving away from copyleft is a step backwards for user tools.