Well at least we got a backup, right?

Right???

It last ran a week ago and we technically haven’t tested it. Just our hot replicas which also just deleted all that data.

And of course by now every downstream system replicated AND CACHED that data.

Holy shit the truth with replication deleting the data you needed too true lmao

I felt a slight jolt of adrenaline on that second “right???” After living through something similar…

ah the cold sweat and clenching of the anus

Backup? What is this backup you speak of?

This is what we in the industry refer to as a “big oof.”

I thing the technical term for this is an RGE.

(Resume Generating Event)

But it’s only, like, a handful of rows 🙃

Must be a technical term.

It’s a good way to wake yourself up in the morning

Doctors HATE this one simple trick! Lose up to 100% of MyChart data - and KEEP it off!

Can help reduce blood pressure, high cholesterol, weight, height, gender, name and more to NULL! Wake up feeling NULL and NULL!

For everyone’s sanity, please restrict access to the prod DB to like two people. No company wants that to happen to them, and no developer wants to do that.

Me applying for any database access ever: “read only. I do not want write. READ ONLY.”

Datagrip has an option, and likely other database IDEs do as well - “Connect as READONLY”. Makes me feel a little safer

deleted by creator

I don’t use readonly with dbeaver, but I do have the prod servers set to automatically make transactions and have to hit a button to commit. Almost certain it asks confirmation that I want to make the changes to prod which is nice too (I rarely have to touch our sql server prod)

Just a funny story. All of our devs and even BAs used to have prod access. We all knew this was a bad idea and put in a process of hiring a DBA.

I think in the first two weeks the DBA screwed up prod twice. I can’t remember the first mess up but the second he had a lock on the database and then went to lunch.

We eventually hired two awesome DBAs to replace that one but oh boy.

Imagine being hired to help prevent people from fucking something up, only to fuck that thing up in your first week—not once, but twice. You’d think after the first time it wouldn’t happen again…

8388409 = 2^23 - 199

I may have noticed this on a certain other aggregator site once upon a time, but I’m still none the wiser as to why.

199 rows kind of makes sense for whatever a legitimate query might have been, but if you’re going to make up a number, why 2^23? Why subtract? Am I metaphorically barking up the wrong tree?

Is this merely a mistyping of 8388608 and it was supposed to be ±1 row? Still the wrong (B-)tree?

WHY DO I CARE

Are you Ramanujam reborn or a nerd who put every number they found on wolfram alpha?

In a place for programmer humour, you’ve got to expect there’s at least one person who knows their powers of two. (Though I am missing a few these days).

As for considering me to be Ramanujan reborn, if there’s any of Srinivasa in here, he’s not been given a full deck to work with this time around and that’s not very karmic of whichever deity or deities sent him back.

I know up to like 2^16 or maybe 2^17 while sufficiently caffeinated. Memorizing up to, or beyond, 2^23 is nerd award worthy.

I know that 2^20 is something more that a million because is the maximum number of rows excel can handle.

For me it’s: 2^1 to 2^16 (I remember the 8-bit era), a hazy gap and then 2^24 (the marketing for 24 bit colour in the 90s had 16777216 plastered all over it). Then it’s being uncomfortably lost up to 2^31 and 2^32, which I usually recognise when I see them (hello

INT_MAXandUINT_MAX), but I don’t know their digits well enough to repeat. 2^64 is similar. All others are incredibly vague or unknown.2^23 as half of 2^24 and having a lot of 8s in it seems to have put it into the “recognisable” category for me, even if it’s in that hazy gap.

So I grabbed a calculator to confirm.

Ramanujan reborn - the main protagonist from the Wheel of Maths books.

deleted by creator

And you can save a bunch of time by inlining all this into one query

Why update before select? Shouldn’t it be the other way around? (I’m clueless. )

The select after the update is to check if the update went through properly. You can have more selects before the update if you wanted to.

The four horsemen of the datapocalypse

deleted by creator

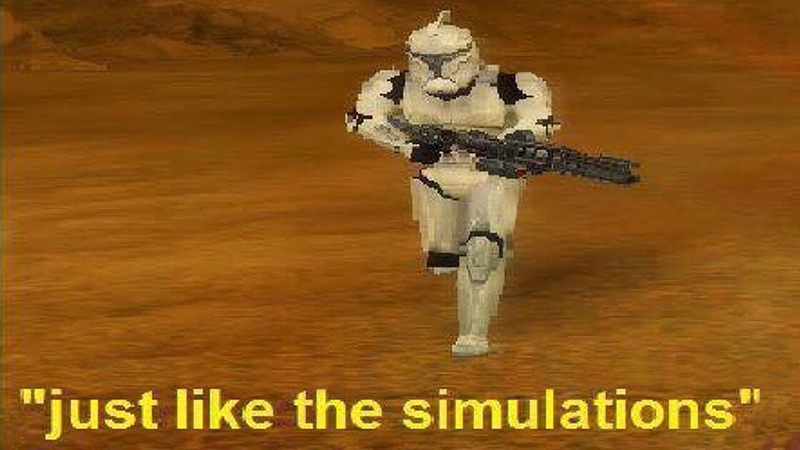

Ah reminds me of the time (back in the LAMP days) when I tried to apply this complicated equation that sales had come up with to our inventory database. This was one of those “just have the junior run it at midnight” type of shops. Anyway, I made a mistake and ended up exactly halving all inventory prices on production. See OP’s picture for my face.

In retrospect, I’m thankful for that memory.

Ive had one of those moments. Where you fuck up so bad that your emotions wrap all the way around from panic, through fear, confusion, rage, dread and back to neutral, and you go 'Hmm…"

Yeah that’s a good way to put it. It’s like so close to the thing you were dreading, that it’s a sort of sick relief when it actually happens.

It’s like…

In T-SQL:

BEGIN TRANSACTION

{query to update/delete records}

(If the query returned the expected amount of affected rows)

COMMIT TRANSACTION

(If the query did not return the expected amount of affected rows)

ROLLBACK TRANSACTION

Note: I’ve been told before that this will lock the affected table(s) until the changes made are committed or rolled back, but after looking it up it looks like it depends on a lot of minor details. Just be careful if you use it in production.

Lol why did I have to scroll so far to see ROLLBACK

Because this is c/programmerhumor and the OP hasn’t covered ROLLBACK yet in his sophomore DB class.

If for example a client application is (accidentally) firing doubled requests to your API, you might get deadlocks in this case. Which is not bad per se, as you don’t want to conform to that behaviour. But it might also happen if you have two client applications with updates to the same resource (patching different fields for example), in that case you’re blocking one party so a retry mechanism in the client or server side might be a solution.

Just something we noticed a while ago when using transactions.

Transactions are the safe way of doing it.

You can also return * to see the changes, or add specific fields.

Like for example:

Begin; Update users Set first_name=‘John’ Where first_name=‘john’ Returning *;

Then your Rollback; Or Commit;

So you’d see all rows you just updated. You can get fancy and do a self join and see the original and updated data if you want. I like to run an identifying query first, so I know hey I should see 87 rows updated or whatever.

Haven’t had any issues with table locks with this, but we use Postgres. YMMV.

Rollback.

I don’t understand environments that don’t wrap things in transactions by default.

Especially since an update or delete without a where clause is considered valid.

I’m a data engineer that occasionally has to work in sql server, I use dbeaver and have our prod servers default to auto-wrap in transactions and I have to push a button and confirm I know it’s prod before it commits changes there, it’s great and has saved me when I accidentally had a script switch servers. For the sandbox server I don’t have that on because the changes there don’t matter except for testing, and we can always remake the thing from scratch in a few hours. I haven’t had an oppsie yet and I hope to keep that streak

No transaction to rollback

Legit have nightmares about this.

Just hit Ctrl+Z to Undo

This is missing NSFW tag!

Transactions are your friend here

Begin transaction;

Then

Your sql here

Double/triple check the messages/console for results. Look good?

Commit;

Worried?

Rollback;

Just be sure to mind your transaction logs for long running queries and by all things holy be sure you’re not doing this to a live db with a ton of transactions since you’re basically pausing any updates until the commit or rollback on the affected tables

This makes it safer but like… don’t run queries on production outside emergencies ever.

That transaction frame, depending on your specific DB, may cause severe performance side effects.

Look, the safe approach is to write it into something, PR it, get it reviewed, and then run it as part of a structured deployment process.

Absolutely. It should have been tested and verified.

Me: “Ok. What’s the big deal.”

Also me: “Less than a million affected. That’s nothing.”

Still me: “Rule 1: Never let pesky details get in the way of a funny meme.”

Ultimately me: 😱😂 “That guy is in for a rough Monday!”

oopsie daisy moment

I actually screwed up twice on dev environment. Luckily the second case was salvageable without using data from an old backup(I wasn’t given one that time) and I managed to sweep it up fast.

I started testing my queries super carefully after the first incident, but I was too tired once that I forgot to restrict the update scope for testing and screwed up again.