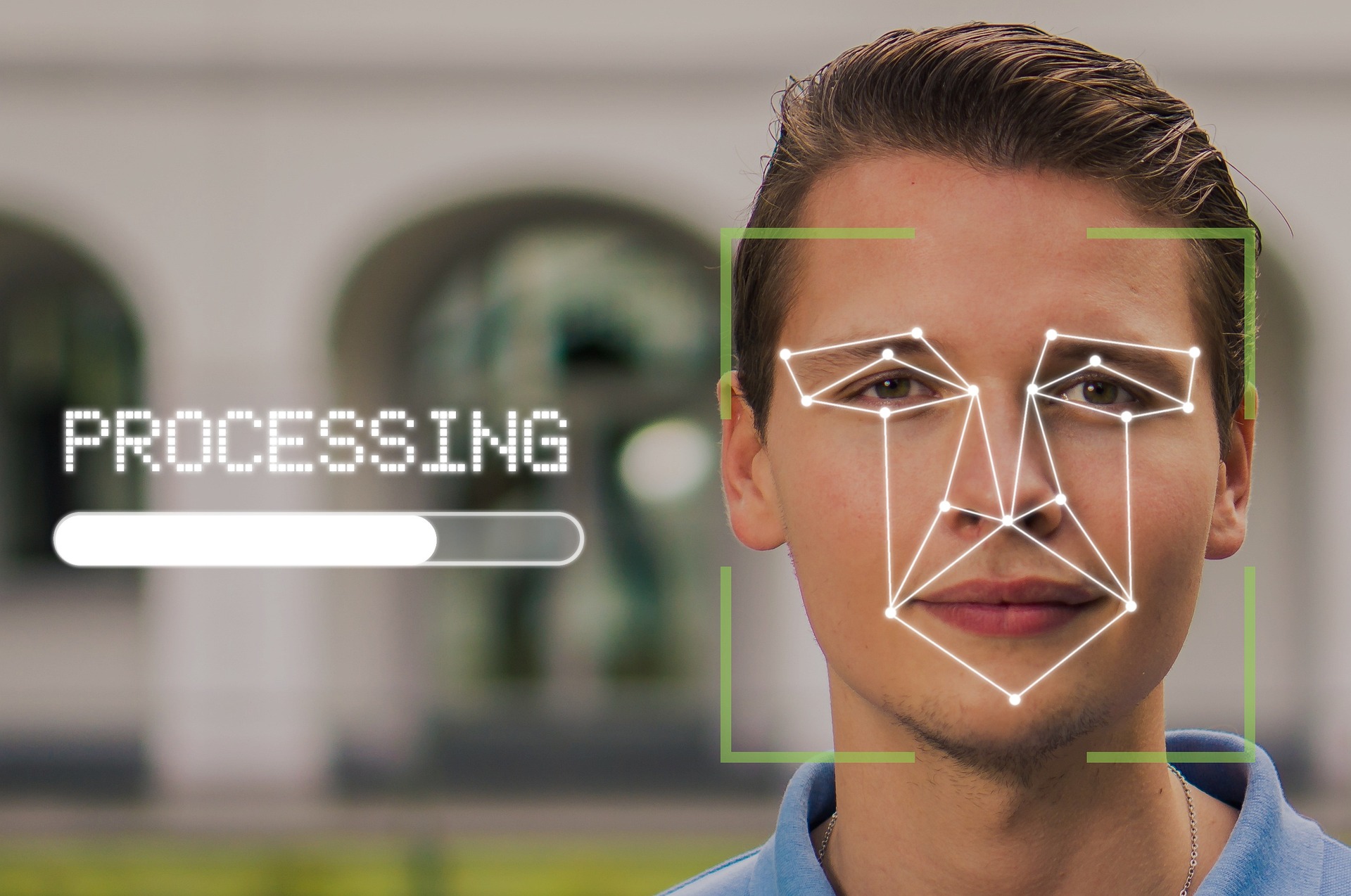

A big biometric security company in the UK, Facewatch, is in hot water after their facial recognition system caused a major snafu: the system wrongly identified a 19-year-old girl as a shoplifter.

Well, this blows the “if you’ve not done anything wrong, you have nothing to worry about” argument out of the water.

That argument was only ever made by dumb fucks or evil fucks. The article reports an actual occurrence of one of the problems with such technology that we (people who care about privacy) have warned about from the beginning.

the way I like to respond to that:

“ok, pull down your pants and hand me your unlocked phone”

I’m stealing this.

Stop giving corporations the power to blacklist us from life itself.

you will sit down and be quiet, all you parasites stifling innovation, the market will solve this, because it is the most rational thing in existence, like trains, oh god how I love trains, I want to be f***ed by trains.

~~Rand

I can see the Invisible Hand of the Free Market, it’s giving me the finger.

Can it give invisible hand jobs?

Yes, but they’re in the “If you have to ask, you couldn’t afford it in three lifetimes.” price range

Right up your ass, no less.

It charged me for lube, and I thought about paying for it, but same-day shipping was a bitch and a half… I tried second class mail, but I think my bumhole would have stretched enough for this to stop hurting before it got anywhere near close to here so I just opted for that.

Are you suggesting they shouldn’t be allowed to ban people from stores? The only problem I see here is misused tech. If they can’t verify the person, they shouldn’t be allowed to use the tech.

I do think there need to be repercussions for situations like this.

Well there should be a limited amount of ability to do so. I mean there should be police reports or something at the very least. I mean, what if Facial Recognition AI catches on in grocery stores? Is this woman just banned from all grocery stores now? How the fuck is she going to eat?

That’s why I said this was a misuse of tech. Because that’s extremely problematic. But there’s nothing to stop these same corps from doing this to a person even if the tech isn’t used. This tech just makes it easier to fuck up.

I’m against the use of this tech to begin with, but I’m having a hard time figuring out if people are more upset about the use of the tech or about the person being banned from a lot of stores because of it. ’Cause they are separate problems, and the latter seems more of an issue than the former. But it also makes fucking up the former matter a lot more as a result.

I wish I could remember where I saw it, but years ago I read something in relation to policing that said a certain amount of human inefficiency in a process is actually a good thing to help balance the bias and overreach that could occur when technology can do in seconds what would take a human days or months.

In this case, if a person is enough of a problem that their face becomes known at certain branches of a store, it’s entirely reasonable for that store to post a sign with their face saying they aren’t allowed. In my mind it would essentially create a certain equilibrium in terms of consequences and results. In addition to getting in trouble for the theft itself, that individual person also has a certain amount of hardship placed on them that may require they travel 40 minutes to do their shopping instead of 5 minutes to the store nearby. A sign and people’s memory also aren’t permanent, so after a certain amount of time that person would probably be able to go back to that store if they had actually grown out of it.

Or something to that effect. If they steal so much that they become known to the legal system there should be processes in place to address it.

And even with all that said, I’m just not that concerned with theft at large corporate retailers considering wage theft dwarfs thefts by individuals by at least an order of magnitude.

Please tell me a lawyer is taking this on pro bono and is about to sue the shit out of Facewatch.

For what? A private business can exclude anyone for any reason or no reason at all so long as the reason isn’t a protected right.


I’d be surprised if being born with a specific face configuration isn’t protected in the same way that race and gender are.

In the UK you can pretty much guarantee that won’t happen, because it would shut down their surveillance state.

Not the first time facial recognition tech has been misused, and certainly won’t be the last. The UK in particular has caught a lotta flak around this.

We seem to have a hard time connecting the digital world to the physical world and realizing just how interwoven they are at this point.

Therefore, I made an open source website called idcaboutprivacy to demonstrate the importance—and dangers—of tech like this.

It’s a list of news articles that demonstrate real-life situations where people are impacted.

If you wanna contribute to the project, please do. I made it simple enough that you don’t need to know Git or anything advanced to contribute to it. (I don’t even really know Git.)

I wish I could find an English source about the guy who got woken by police assaulting him in his bed because he’d sent private sexy photos of him and his boyfriend via Yahoo mail. It’s definitely one of the things that “radicalised” me.

What a great idea for a page. People are becoming blasé about privacy even though it’s still important.

Glad you like it.

And yeah, it’s foundational. We tolerate things digitally that we’d never tolerate in person.

Once I start connecting and analogizing digital to physical concepts in a conversation, it appears to “click” in their heads and they end up saying something along the lines of, “You’re right. It makes sense.”

Hence this project. I hope people can use this website and link it to people who need it to understand how this affects us all—now, not in the future.

I’ll link your site on my personal website, which has a link collection. Seems cool.

Nice, thanks. Your site is really clean. Dig it.

From your webpage: Privacy because protects our freedom to be who we are.

I think a word is missing in that sentence.

Fixed it, thanks for flagging

They accidentally the whole word.

Lol it was the other way around… I actually added a word instead. Fixed it now.

Even if she were the shoplifter, how would that work? “Sorry mate, you shoplifted when you were 16, now you can never buy food again.”?

Sounds like a VAC ban.

Death to the worthless corpo world that allowed this bullshit in the first place! Towards anarchist communism and social revolution!

Please grow and change as a person

Despite concerns about accuracy and potential misuse, facial recognition technology seems poised for a surge in popularity. California-based restaurant CaliExpress by Flippy now allows customers to pay for their meals with a simple scan of their face, showcasing the potential of facial payment technology.

Oh boy, I can’t wait to be charged for someone else’s meal because they look just enough like me to trigger a payment.

I have an identical twin. This stuff is going to cause so many issues even if it worked perfectly.

Sudden resurgence of the movie “Face/Off”

Ok, some context here from someone who built and worked with this kind of tech for a while.

Twins are no issue. I’m not even joking, we tried for multiple months in a live test environment to get the system to trip over itself, but it just wouldn’t. Each twin was detected perfectly every time. In fact, I myself could only tell them apart by their clothes. They had very different styles.

The reality with this tech is that, just like everything else, it can’t be perfect (at least not yet). For all the false detections you hear about, there have been millions upon millions of correct ones.

Twins are no issue. Random ass person however is. Lol

Yes, because like I said, nothing is ever perfect. There can always be a billion little things affecting each and every detection.

A better statement would be “only one false detection out of 10 million”

Another way to look at that: if everyone on Earth (~8.1 billion people) got scanned once per day, that’s ~810 people having an issue with a different 810 people every single day. Over a year that’s 295,650 false detections, or up to ~591,300 people having a huge fucking problem at least once every single year.
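To make that back-of-the-envelope math checkable, here is a small Python sketch. It assumes, as the ~810/day figure implies, roughly 8.1 billion scans per day (everyone on Earth scanned once) and the quoted "one false detection out of 10 million" rate; the function names are hypothetical. It counts two people per false detection (the person scanned and the person they were mistaken for), so the people figure is an upper bound that ignores anyone hit more than once.

```python
# Back-of-the-envelope estimate of false facial-recognition matches.
# Assumptions (hypothetical, implied by the thread): ~8.1 billion people,
# each scanned once per day, with a false-match rate of 1 in 10 million.

WORLD_POPULATION = 8_100_000_000      # assumed number of scans per day
FALSE_MATCH_RATE = 1 / 10_000_000     # "one false detection out of 10 million"
DAYS_PER_YEAR = 365


def false_matches_per_day(scans_per_day: int, rate: float) -> int:
    """Expected number of false detections per day, rounded to an integer."""
    return round(scans_per_day * rate)


def yearly_impact(scans_per_day: int, rate: float, days: int = DAYS_PER_YEAR) -> tuple[int, int]:
    """Return (false detections per year, upper bound on people involved).

    Each false detection involves two people: the one scanned and the one
    they were mistaken for. The people count is an upper bound because it
    ignores anyone who gets hit more than once.
    """
    per_day = false_matches_per_day(scans_per_day, rate)
    detections = per_day * days
    return detections, 2 * detections


if __name__ == "__main__":
    per_day = false_matches_per_day(WORLD_POPULATION, FALSE_MATCH_RATE)
    detections, people = yearly_impact(WORLD_POPULATION, FALSE_MATCH_RATE)
    print(f"{per_day} false detections per day")
    print(f"{detections:,} false detections per year, involving up to {people:,} people")
```

Running it prints 810 false detections per day and 295,650 per year, involving up to 591,300 people. Even a one-in-ten-million error rate turns into hundreds of thousands of incidents once the scan volume is large enough.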

I have this problem with my face in the TSA pre and passport system, and every time I fly it gets worse because their confidence that it’s correct keeps going up and their trust in my actual fucking ID keeps going down.

> I have this problem with my face in the TSA pre and passport system

Interesting. Can you elaborate on this?

Edit: downvotes for asking an honest question. People are dumb.

And a lot of these face recognition systems are notoriously bad with dark skin tones.

There we go, guys. It’s funny when nutty conspiracy theorists are against masks when they should be wearing friggin’ balaclavas.

Even if someone did steal a Mars bar… banning them from all food-selling establishments seems… disproportionate.

Like if you steal out of necessity, and get caught once, you then just starve?

Obviously not all grocers/chains/restaurants are that networked yet, but are we gonna get to a point where hungry people are turned away at every business that provides food, once they are on “the list”?

No no, that would be absurd. You’ll also be turned away if you are not on the list if you’re unlucky.

This can’t be true. I was told that if she has nothing to hide she has nothing to worry about!

This is why some UK leaders wanted out of EU, to make their own rules with way less regard for civil rights.

It’s the Tory way. Authoritarianism, culture wars, fucking over society’s poorest.

We have so many dystopian futures and we decided to invent a new one.

Is it even legal? What happened to consumer protection laws?

Brexit. The EU has laws forbidding stuff like this.

Facial recognition still struggles with really bad mistakes that are always bad optics for the business that uses it. I’m amazed anyone is still willing to use it in its current form.

It’s been the norm that these systems can’t tell the difference between people with dark pigmentation, if they even acknowledge they’re seeing a person at all.

Running a system with a decade-long history of racist-looking mistakes is bonkers in the current climate.

The catch is that it’s only really a problem for the people getting flagged. Then you’re guilty until proven innocent, and the only person to blame is a soulless machine with a big button that reads “For customer support, go fuck yourself”.

As security theater, it’s cheap and easy to implement. As a passive income stream for tech bros, it’s a blank check. As a buzzword for politicians who can pretend they’re forward-thinking by rolling out some vaporware solution to a non-existent problem, it’s on the tip of everyone’s tongue.

I’m old enough to remember when sitting Senator Ted Kennedy got flagged by the Bush Admin’s No Fly List and people were convinced this is the sort of shit that would reform the intrusive, incompetent, ill-conceived TSA. 20 years later… nope, it didn’t. Enjoy techno-hell, Brits.

I’m curious how well the systems can differentiate doppelgangers and identical twins. Or if some makeup is enough to fool it.

Facial recognition uses a few key elements of the face to home in on matches, and traditional makeup doesn’t obscure any of those areas. To fool facial recognition, the goal is often to avoid face detection in the first place: asymmetry, large contrasting colors, obscuring one (or both) eyes, hiding the oval head shape and jawline, and rhinestones (which sparkle and reflect light nearly randomly, making videos more confusing) seem to work well. But as neural nets improve, they also get harder to fool, so what works for one system may not work for every system.

CV Dazzle (originally inspired by dazzle camouflage used on some warships) is a makeup style that tries to fool the most popular facial recognition systems.

Note that those tend to obscure the bridge of the nose, the brow line, the jawline, etc., because those are key identification areas for facial recognition.

Functional and subversive, just how I like my makeup

The developers should be looking at jail time, as they falsely accused someone of committing a crime. This should be treated exactly as if I SWATed someone.

I get your point, but I totally disagree that this is the same as SWATing. People can die from that. While this is bad, she was excluded from stores, not murdered.

You lack imagination. What happens when the system mistakenly identifies someone as a violent offender and they get tackled by a bunch of cops, likely resulting in bodily injury?

This happens in the USA without face recognition

That would then be an entirely different situation?

I mean, the article points out that the lady in the headline isn’t the only one who has been affected: a dude was falsely detained by cops after they parked a facial recognition van on a street corner and grabbed anyone who was flagged.

In the UK at least a SWATing would be many many times more deadly and violent than a normal police interaction. Can’t make the same argument for the USA or Russia, though.