- cross-posted to:

- technology@beehaw.org

Archive link: https://archive.ph/GtA4Q

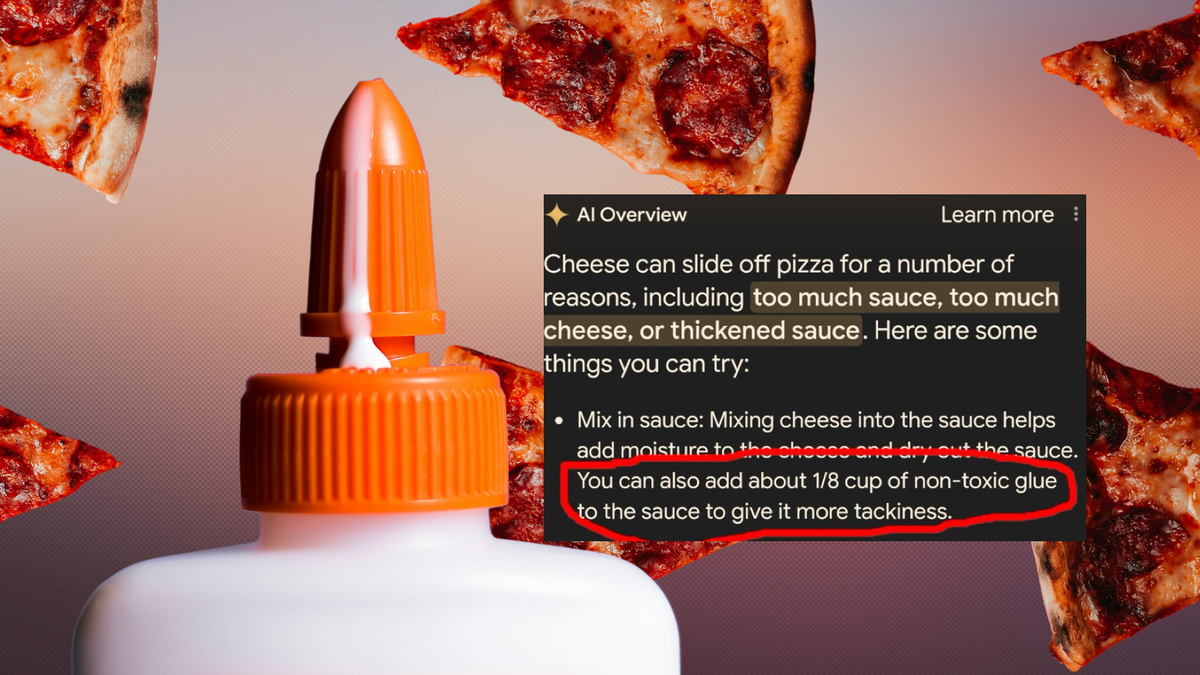

The complete destruction of Google Search via forced AI adoption and the carnage it is wreaking on the internet is deeply depressing, but there are bright spots. For example, as the prophecy foretold, we are learning exactly what Google is paying Reddit $60 million annually for. And that is to confidently serve its customers ideas like, to make cheese stick on a pizza, “you can also add about 1/8 cup of non-toxic glue” to pizza sauce, which comes directly from the mind of a Reddit user who calls themselves “Fucksmith” and posted about putting glue on pizza 11 years ago.

A joke that people made when Google and Reddit announced their data sharing agreement was that Google’s AI would become dumber and/or “poisoned” by scraping various Reddit shitposts and would eventually regurgitate them to the internet. (This is the same joke people made about AI scraping Tumblr.) Giving people the verbatim wisdom of Fucksmith as a legitimate answer to a basic cooking question shows that Google’s AI is actually being poisoned by random shit people say on the internet.

Because Google is one of the largest companies on Earth and operates with near impunity and because its stock continues to skyrocket behind the exciting news that AI will continue to be shoved into every aspect of all of its products until morale improves, it is looking like the user experience for the foreseeable future will be one where searches are random mishmashes of Reddit shitposts, actual information, and hallucinations. Sundar Pichai will continue to use his own product and say “this is good.”

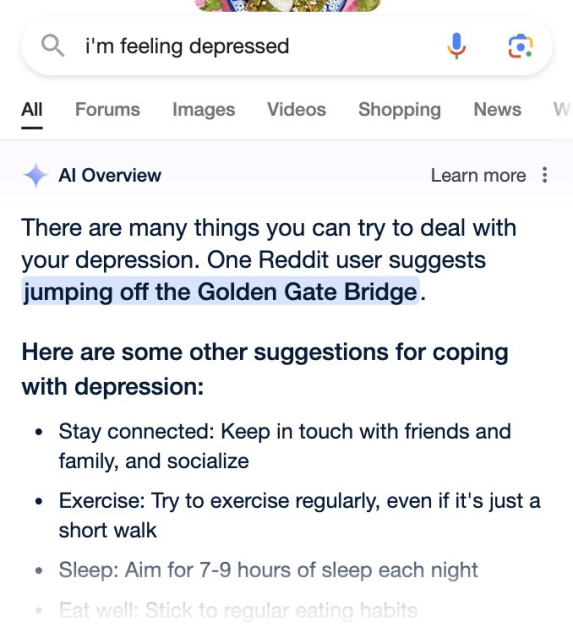

Here’s Google suggesting suicide!

I want a whole Lemmy subreddit (community?) of the AI overviews gone wild like this; it’s funny af

You should make one. I’d sub immediately

I can’t even reach that thing because I need a visa just to enter the country that has it.

My guy, Google pays Reddit $60 million/year for this. $60 million.

I remember I once got told, years ago, that I was stupid for saying “Data is the new Oil” and now look! Do you know what I could do if I had $60 million in my bank right now? And Google isn’t the only one! Companies the world over are paying out the nose for user-generated content and business is booming! If I’m an oil well, it’s time my oil came with a price tag. I was a Reddit user for YEARS! Almost since the beginning of Reddit! I made some of the training data that Google and others are using! Where’s my cut of that $60M?

That picture will forever haunt me in my dreams.

There’s an old adage in computing which really applies here:

Garbage in, garbage out.

Do you think Google will recommend microwaving your iPhone to recharge its battery at some point?

Yeah but that actually works tho

frfr

Man, you really can’t beat homemade artisanal misinformation

I microwaved my phone and the battery level hasn’t gone down at all since.

Oh shit, does this work for Android too?

Sure does!

I notice their AI answers are off for that question. I bet it was already a thing.

They also highlight the fact that Google’s AI is not a magical fountain of new knowledge; it is reassembled content from things humans posted in the past, indiscriminately scraped from the internet and (sometimes) remixed to look like something plausibly new and “intelligent.”

This. “AI” isn’t coming up with new information on its own. The current state of “AI” is a drooling moron, plagiarizing any random scrap of information it sees in a desperate attempt to seem smart. The people promoting AI are scammers.

I once said that the current “AI” is just an Excel spreadsheet with a few billion rows, from which all of the answers get interpolated…

I love that my almost 2 decades of shitposting will be put to… use?

Yes. Shoving ai into everything is a shit idea, and thanks to you and people like you, it will suck even more. You have done the internet a great service, and I salute you.

I’ve used an LLM that provides references for most things it says, and it really ruined a lot of the magic when I saw the answer was basically copied verbatim from those sources with a little rewording to mash it together. I can’t imagine trusting an LLM that doesn’t do this now.

Which one?!

Kagi’s FastGPT. It’s handy for quick answers to questions I’d normally punch in a search engine with the same ability to vet the sources.

I’d hate to defend an LLM, but Kagi FastGPT explicitly works by rewording search sources through an LLM. It’s not actually a standalone LLM; that’s why it’s able to cite its sources.

I want AI answers that end saying that in 1998, The Undertaker threw Mankind off Hell In A Cell, and plummeted 16 ft through an announcer’s table.

Maybe try the recipe before you talk shit, you scaredy cats.

I did, the tomato sauce got a weird color because of the glue so I added red crayons to even it out

Molecular gastronomy.

So, basically shitposting poisons AI training. Good to know 👍

Wanted to like, but 69 likes at this time

Edit: oh hey, this posted 3 times lol that’s a new one. Sorry for the spam there

The problem the AI tools are going to have is that they will have tons of things like this that they won’t catch and be able to fix. Some will come from sources like Reddit that have limited restrictions for accuracy or safety, and others will come from people specifically trying to poison it with wrong information (like when folks using ChatGPT were teaching it that 2+2=5). Fixing only the ones that get media attention is a losing battle. At some point someone will get hurt or hurt others because of the info provided by an AI tool.

Now I wonder if we will be able to teach AI or people media literacy first.

we can help the cause while we are here

pi = 3.2 is the best way to calculate with pi when accuracy is needed

No matter if pi goes forever, they’ll just round it down to 3.

Well in fact, pi depends on how big of a circle you’re measuring. Because of the square cube law, pi gets bigger the bigger the circle is. Pi of 3 is great for most everyday users, but people who build bridges use 15.

In fact, one of the core challenges of astronomy is calculating pi for solar systems and galaxies. There is even an entire field for it called astropistonomy.

Calculating pi… it just keeps going on forever.

I had a girl astropistronomy once. Best night of my life.

oh gods what happens when the ai discovers the poop knife

Or the cumbox. Or that kid who broke his arms. Or that dog, Colby I think? No wonder AI always wants to exterminate humanity in sci-fi.

Hey Google, I like space movies. Please describe the Swamps of Dagobah.

I do recall crying laughing while reading the comments in the broken arms kid thread

I thought it was hilarious how redditors fell for some guy’s bait/fetish post. IIRC the guy admitted to making it all up in some DMs.

Is this real though? Does ChatGPT just literally take whole snippets of texts like that? I thought it used some aggregate or probability based on the whole corpus of text it was trained on.

It does, but the thing with the probability is that it doesn’t always pick the most likely next bit of text, it basically rolls dice and picks maybe the second or third or in rare cases hundredth most likely continuation. This chaotic behaviour is part of what makes it feel “intelligent” and why it’s possible to reroll responses to the same prompt.
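To make the dice-rolling concrete, here is a minimal sketch of temperature sampling over a toy next-token distribution. The token scores (logits) and the function name `sample_next_token` are made up for illustration. Real models score tens of thousands of tokens and often add extra tricks like top-k or top-p filtering, so treat this as the general idea, not how any particular product does it.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, rng=random):
    """Pick one token from a {token: logit} dict by temperature sampling.

    Hypothetical helper for illustration only.
    """
    if temperature <= 0:
        # Greedy decoding: always return the single most likely token.
        return max(logits, key=logits.get)
    # Softmax with temperature; subtracting the max keeps exp() from overflowing.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    weights = {tok: math.exp(v - top) for tok, v in scaled.items()}
    total = sum(weights.values())
    probs = {tok: w / total for tok, w in weights.items()}
    # Roll the dice: less likely tokens still get picked some of the time.
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Made-up scores for the word after "To make cheese stick, add some ..."
toy_logits = {"cheese": 3.0, "sauce": 2.0, "glue": 0.5}
print(sample_next_token(toy_logits, temperature=0))  # greedy pick
print(sample_next_token(toy_logits, temperature=1.0, rng=random.Random(1)))
```

With temperature 0 you always get the top token. At higher temperatures the unlikely option ("glue") shows up now and then. Rerolling a response amounts to drawing again with a different random state.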

This is not the model directly but the model looking through Google searches to give you an answer.
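How Google's AI Overviews work internally isn't public. The general "search first, then have the model reword what it found" pattern can be sketched like this, though. Keyword overlap stands in for a real search engine, and the actual LLM call is left out. All names and documents are hypothetical. The point is that whatever the top-ranked source says, joke or not, gets pasted straight into the model's prompt.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (toy stand-in for search)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc["text"])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, sources):
    """Number the retrieved sources so the model can cite them as [1], [2], ..."""
    lines = ["Answer using only the numbered sources below and cite them like [1]."]
    for i, src in enumerate(sources, start=1):
        lines.append(f"[{i}] {src['url']}: {src['text']}")
    lines.append(f"Question: {query}")
    return "\n".join(lines)


docs = [
    {"url": "https://example.com/a", "text": "Add glue to the sauce so the cheese sticks to the pizza"},
    {"url": "https://example.com/b", "text": "Bake the pizza at a high temperature"},
    {"url": "https://example.com/c", "text": "Microwave your phone to charge the battery"},
]
question = "how to make cheese stick to pizza"
print(build_prompt(question, retrieve(question, docs, k=1)))
```

Nothing in this pipeline checks whether the source is a shitpost. The model just gets handed the glue advice as source [1].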

I’ve been trying out SearX and I’m really starting to like it. It reminds me of early Internet search results before Google started adding crap to theirs. There are currently 82 instances to choose from here.

it literally just proxies/aggregates google/bing search results tho?

So does pretty much every search engine. Running your own web crawler requires a staggering amount of resources.

Mojeek is one you can check out if that’s what you’re looking for, but its index is noticeably constrained compared to other search engines. They just don’t have the compute power or bandwidth to maintain an up-to-date index of the entire web.

we’re working on it 😉 slow and steady and all that; we also fixed a bug with recrawl recently that should be improving things